Background and Motivation: Why MCP Matters Now

Generative AI, particularly Large Language Models (LLMs), has fundamentally transformed how we interact with technology. Instead of writing complex code or detailed specifications, we can now describe what we want in plain language, and AI systems handle the heavy lifting, whether that’s generating code, creating content, or producing images.

But as powerful as these AI systems are in isolation, their true potential emerges when they can interact with the real world: sending emails, querying databases, managing Git repositories, and integrating with the countless tools that power modern workflows. This is where the Model Context Protocol (MCP) comes into play.

Introduced by Anthropic in November 2024, MCP addresses a critical gap in the AI ecosystem. Before MCP, each AI application required custom integrations with external tools and data sources, a fragmented approach that limited scalability and interoperability. MCP changes this by providing an open, standardized protocol that lets any compliant AI application connect to any compliant tool or data source.

The protocol has gained momentum quickly, with backing from industry leaders including Anthropic, LangChain, and Microsoft. Platforms such as the OpenAI Agents SDK, Microsoft Copilot Studio, and Amazon Bedrock Agents, along with development tools like Cursor, have already added MCP support. Uptake by OpenAI, Google DeepMind, and toolmakers like Zed and Sourcegraph signals a growing consensus around MCP as a standard layer for connecting agents to tools and data.

What is MCP? Understanding the Architecture

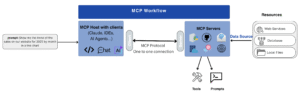

MCP operates on an elegant three-component architecture designed to facilitate secure, seamless communication between AI applications and external systems:

MCP Host

The MCP Host is the AI application that provides the execution environment for AI-based tasks. Think of it as the home base where your AI agent lives and operates. Examples include:

- Claude Desktop for AI-assisted content creation

- Cursor, an AI-powered IDE for software development

- Visual Studio Code (VS Code), which can act as an MCP host by instantiating MCP clients that connect to MCP servers for functionality such as Sentry integration or local filesystem access

- Autonomous AI agents: any application that uses an AI agent and needs to access external tools, APIs, or data sources through MCP

The host manages the overall user experience and coordinates with the MCP client to fulfill user requests.

MCP Client

The MCP Client acts as the intelligent intermediary, sitting between the host and external servers. It’s responsible for:

- Request Management: Initiating and managing requests to MCP servers

- Capability Discovery: Querying servers to understand available functions and tools

- Real-time Updates: Processing notifications from servers about task progress

- Performance Monitoring: Gathering usage data to optimize interactions

- Secure Communication: Managing data exchange through the transport layer

The client ensures that the AI system can dynamically discover and utilize available tools without requiring custom integrations for each service.
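A minimal sketch of what discovery and invocation might look like on the wire, assuming the JSON-RPC 2.0 message format that MCP builds on. The `tools/list` and `tools/call` method names come from the MCP specification; the `make_request` helper, the `send_message` tool, and its arguments are hypothetical, and the transport and initialization handshake are omitted.

```python
import itertools
import json

# JSON-RPC request ids should be unique within a session.
_ids = itertools.count(1)


def make_request(method, params=None):
    """Build a JSON-RPC 2.0 request as a plain dict (hypothetical helper)."""
    request = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        request["params"] = params
    return request


# Capability discovery: ask the server which tools it exposes.
list_tools = make_request("tools/list")

# Request management: invoke one discovered tool by name.
# "send_message" and its arguments are invented for illustration.
call_tool = make_request(
    "tools/call",
    {
        "name": "send_message",
        "arguments": {"channel": "#builds", "text": "Build passed"},
    },
)

print(json.dumps(list_tools))
print(json.dumps(call_tool, indent=2))
```

A real client would send these messages over a transport such as stdio or HTTP and match each response to its request by `id`.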

MCP Server

The MCP Server is the bridge between the AI system and external tools or data sources. MCP servers provide three core capabilities:

Tools: Enable direct operations on external services. When your AI needs to commit code to GitHub, query a Snowflake database, or send a Slack message, tools make it happen. Unlike ad hoc function calling, where each application hand-wires its own function definitions, MCP tools are described by the server in a standard format, so the model can select and invoke the right tool based on context.

Resources: Provide access to structured and unstructured data from various sources, such as local files, databases, cloud storage, or APIs. This enables AI models to make informed, data-driven decisions by accessing relevant information in real time.

Prompts: Offer reusable templates and workflows that optimize AI responses and ensure consistency across tasks. These predefined prompts help maintain quality and efficiency in repetitive operations.
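To make the three capability types concrete, here is a toy, in-memory sketch of how a server might route requests to tools, resources, and prompts. It is not an implementation of the protocol: the registries, the `tool` decorator, the `add` tool, and the sample URI are all hypothetical, and the return values are deliberately simplified rather than matching the real wire format.

```python
from typing import Any, Callable, Dict

# Hypothetical in-memory registries, one per capability type.
TOOLS: Dict[str, Callable[..., Any]] = {}
RESOURCES: Dict[str, str] = {
    "file:///notes/release.txt": "Release 1.2 ships Friday.",
}
PROMPTS: Dict[str, str] = {
    "summarize": "Summarize the following text:\n{text}",
}


def tool(func: Callable[..., Any]) -> Callable[..., Any]:
    """Register a plain function as a callable tool."""
    TOOLS[func.__name__] = func
    return func


@tool
def add(a: int, b: int) -> int:
    """Example tool: performs an operation and returns a result."""
    return a + b


def handle(method: str, params: Dict[str, Any]) -> Any:
    """Route a request to the matching capability (simplified results)."""
    if method == "tools/list":
        return sorted(TOOLS)
    if method == "tools/call":
        return TOOLS[params["name"]](**params.get("arguments", {}))
    if method == "resources/read":
        return RESOURCES[params["uri"]]
    if method == "prompts/get":
        return PROMPTS[params["name"]].format(**params.get("arguments", {}))
    raise ValueError(f"unsupported method: {method}")


print(handle("tools/list", {}))
print(handle("tools/call", {"name": "add", "arguments": {"a": 2, "b": 3}}))
print(handle("resources/read", {"uri": "file:///notes/release.txt"}))
```

The point of the sketch is the separation of concerns: tools perform actions, resources return data, and prompts return reusable templates, all behind one uniform request interface.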

MCP in Action: Transforming the Software Development Lifecycle

The developer community has been among the earliest and most enthusiastic adopters of MCP, and for good reason. MCP tackles key pain points in the software development lifecycle by integrating with essential developer tools. We chose this example because it spans the entire end-to-end development lifecycle, though MCP is used in many other areas as well.

Version Control Integration

Instead of manually switching between your AI assistant and Git commands, MCP enables AI agents to interact with repositories directly. Your AI can analyze code changes, create meaningful commit messages, manage branches, and even handle pull requests, all while maintaining proper version control practices.

Database Operations

With MCP, AI assistants can connect directly to databases like Snowflake, execute queries, analyze results, and present findings in various formats. This eliminates the tedious back-and-forth of copying queries and results between systems.

Testing and Quality Assurance

MCP integrates with testing frameworks like Playwright, allowing AI agents to automatically generate test scripts, execute UI tests, and interpret results. This creates a more efficient feedback loop in the development process.

Monitoring and Debugging

Through MCP servers connected to monitoring tools like Azure Monitor, AI assistants can access logs, analyze performance metrics, and even suggest optimizations, providing developers with intelligent insights into their applications’ behavior.

Team Collaboration

MCP’s integration with communication platforms like Slack enables AI agents to participate in team workflows, sending updates, sharing results, and facilitating project coordination without requiring manual intervention.

This standardized approach means developers can focus on building features rather than managing integrations, significantly boosting productivity across the development lifecycle.

Security Challenges: The Critical Importance of Proper IAM and Authentication

While MCP opens up exciting possibilities for AI agent capabilities, it also introduces significant security considerations that organizations must address proactively.

Identity and Access Management (IAM): The Foundation of MCP Security

IAM represents one of the most critical aspects of MCP implementation. When AI agents can perform real-world operations, such as accessing databases, modifying code repositories, or sending communications, proper authorization becomes paramount.

The Current Challenge: As a relatively new protocol, MCP presents several authentication and authorization challenges that organizations must navigate carefully. The rapidly evolving ecosystem means that authentication implementations can vary across different MCP clients and servers, creating potential inconsistencies in security approaches.

In managing MCP deployments, key security concerns revolve around:

- Identity Verification: Ensuring the proper identification of AI agents when they request access to sensitive tools and data.

- Permission Management: Effectively handling permissions in environments where multiple users and agents interact with shared MCP servers.

- Policy Consistency: Maintaining uniform security policies as organizations expand their MCP deployments.

Without careful implementation, there’s a risk of unauthorized tool access or exposure of sensitive information through improperly configured agent permissions.

Progress and Solutions: MCP has made progress in addressing these challenges by adopting an OAuth 2.1-based authorization framework, which provides a more standardized approach to permission management. However, successful implementation requires careful consideration of:

- Granular Permissions: Ensuring AI agents have access only to the specific resources and operations they need

- Dynamic Scope Management: Adjusting permissions based on context and task requirements

- Audit Trails: Maintaining comprehensive logs of all AI agent actions for compliance and security monitoring
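The three considerations above can be sketched together in a few lines. This is an illustrative model only, not part of MCP or of any OAuth library: the `AgentSession` class, the `temporary_scope` helper, and the scope and tool names (`repo:read`, `repo:write`, `git_push`) are all assumptions made for the example.

```python
import time
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Dict, Iterator, List, Set


@dataclass
class AgentSession:
    """Hypothetical per-agent session holding scopes and an audit log."""
    agent_id: str
    scopes: Set[str]
    audit_log: List[Dict] = field(default_factory=list)

    def authorize(self, tool: str, required_scope: str) -> bool:
        """Granular permissions: allow only if the exact scope is held.
        Audit trail: record every decision, allowed or denied."""
        allowed = required_scope in self.scopes
        self.audit_log.append({
            "ts": time.time(),
            "agent": self.agent_id,
            "tool": tool,
            "scope": required_scope,
            "allowed": allowed,
        })
        return allowed


@contextmanager
def temporary_scope(session: AgentSession, scope: str) -> Iterator[AgentSession]:
    """Dynamic scope management: grant a scope for one task, then revoke it."""
    added = scope not in session.scopes
    session.scopes.add(scope)
    try:
        yield session
    finally:
        if added:
            session.scopes.discard(scope)


session = AgentSession("agent-1", {"repo:read"})
before = session.authorize("git_push", "repo:write")
with temporary_scope(session, "repo:write"):
    during = session.authorize("git_push", "repo:write")
after = session.authorize("git_push", "repo:write")
print(before, during, after, len(session.audit_log))
```

In a production deployment the scopes would typically come from the access token issued by the authorization server, and the audit log would go to durable, tamper-resistant storage rather than a list in memory.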

Authentication: Ensuring Proper Access Control

Authentication in MCP environments requires a multi-layered approach:

User-Level Authentication: Access to MCP tools should be restricted to users with appropriate permissions. For example, Git repository access should only be granted to users who are already authorized members of that repository.

Agent-Level Authentication: AI agents themselves need secure authentication mechanisms to prevent unauthorized systems from impersonating legitimate agents.

Tool-Level Authentication: Each MCP server should implement robust authentication to verify both the requesting agent and the underlying user permissions.
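As a rough illustration of how these layers combine, the sketch below has a server accept a request only when both the agent and the underlying user check out. It uses an HMAC signature as a stand-in for agent authentication; this is an assumption made to keep the example self-contained, not how MCP specifies authentication. The secrets, repository names, and function names are all hypothetical.

```python
import hashlib
import hmac

# Hypothetical data: one shared secret per registered agent,
# and repository membership per user.
AGENT_SECRETS = {"agent-1": b"demo-secret-do-not-use"}
REPO_MEMBERS = {"acme/api": {"alice"}}


def sign(agent_id: str, payload: bytes) -> str:
    """Agent side: sign the request payload with the agent's secret."""
    return hmac.new(AGENT_SECRETS[agent_id], payload, hashlib.sha256).hexdigest()


def agent_is_authentic(agent_id: str, payload: bytes, signature: str) -> bool:
    """Agent-level check: reject unknown agents and forged signatures."""
    secret = AGENT_SECRETS.get(agent_id)
    if secret is None:
        return False
    expected = hmac.new(secret, payload, hashlib.sha256).hexdigest()
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(expected, signature)


def user_is_authorized(user: str, repo: str) -> bool:
    """User-level check: only existing repository members get access."""
    return user in REPO_MEMBERS.get(repo, set())


def server_accepts(agent_id: str, user: str, repo: str,
                   payload: bytes, signature: str) -> bool:
    """Tool-level check: the server verifies both agent and user."""
    return (agent_is_authentic(agent_id, payload, signature)
            and user_is_authorized(user, repo))


payload = b'{"tool": "git_push", "repo": "acme/api"}'
signature = sign("agent-1", payload)
print(server_accepts("agent-1", "alice", "acme/api", payload, signature))
print(server_accepts("agent-1", "bob", "acme/api", payload, signature))
```

The design choice worth noting is that a valid agent identity is never sufficient on its own: the request is still rejected if the user on whose behalf the agent acts lacks access to the target resource.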

Security Risks in LLM-Tool Interactions

The integration of tool-use capabilities into LLM agents significantly expands their functionality, but also introduces new and more severe security risks. Obfuscated adversarial prompts can lead LLM agents to misuse tools, enabling attacks such as data exfiltration and unauthorized command execution. These vulnerabilities are particularly concerning as they generalize across models and modalities, making them difficult to defend against with traditional security measures.

Additional Security Considerations

Prompt Injection Attacks: As AI agents gain more capabilities through MCP, they become attractive targets for sophisticated prompt injection attacks that could manipulate them into performing unauthorized actions.

Network Security: MCP communications must be properly encrypted and secured, especially when traversing network boundaries or connecting to cloud services.

Monitoring and Auditing: Comprehensive logging and monitoring systems are essential to track AI agent activities, detect potential security breaches, and maintain compliance with regulatory requirements.
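One way to limit the damage a successful prompt injection can do is to screen every tool call against a policy before it runs and to log each decision. The sketch below is a simplified illustration under stated assumptions: the tool names, the allow-listed host, the `mcp.audit` logger name, and the `screen_tool_call` function are hypothetical, and allow-listing reduces the blast radius rather than preventing injection itself.

```python
import json
import logging
from urllib.parse import urlparse

logging.basicConfig(level=logging.INFO, format="%(message)s")
audit = logging.getLogger("mcp.audit")  # hypothetical logger name

ALLOWED_TOOLS = {"http_get", "read_file"}     # hypothetical tool names
ALLOWED_HOSTS = {"api.internal.example.com"}  # hypothetical egress allow-list


def screen_tool_call(tool: str, arguments: dict) -> bool:
    """Return True only if the call passes the policy; log every decision."""
    reason = "ok"
    if tool not in ALLOWED_TOOLS:
        reason = "tool not allow-listed"
    elif tool == "http_get":
        parsed = urlparse(arguments.get("url", ""))
        if parsed.scheme != "https":
            # Network security: refuse unencrypted destinations.
            reason = "non-HTTPS destination"
        elif parsed.hostname not in ALLOWED_HOSTS:
            # Exfiltration guard: refuse unknown destinations.
            reason = "destination host not allow-listed"
    allowed = reason == "ok"
    # Monitoring: emit one structured record per decision.
    audit.info(json.dumps({"tool": tool, "allowed": allowed, "reason": reason}))
    return allowed


screen_tool_call("http_get", {"url": "https://api.internal.example.com/v1/status"})
screen_tool_call("http_get", {"url": "https://attacker.example.net/upload"})
screen_tool_call("run_shell", {"cmd": "rm -rf /"})
```

Because each decision is emitted as a JSON record, the same log stream can feed anomaly detection or compliance reporting without extra parsing.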

Security Best Practices for MCP Implementation

The Model Context Protocol represents a transformative leap forward in AI agent capabilities, offering unprecedented opportunities for automation and productivity enhancement. However, as with any powerful technology, the key to successful adoption lies in implementing robust security practices from the ground up.

Organizations adopting MCP should prioritize:

- Comprehensive IAM Strategy: Implement granular, context-aware permissions that follow the principle of least privilege

- Continuous Monitoring: Deploy real-time monitoring and anomaly detection for AI agent activities

- Regular Security Audits: Conduct thorough assessments of MCP implementations and associated risks

- Red Team Exercises: Test systems against prompt injection and other AI-specific attack vectors

The Future is Secure AI Integration

The future of AI lies in systems that can interact seamlessly with the rest of our digital ecosystem. MCP makes this future possible, but only with proper security foundations. By partnering with Nextsec.ai, organizations can confidently embrace the power of MCP while maintaining the security posture necessary for enterprise-grade AI deployments.

The Model Context Protocol isn’t just changing how AI agents work; it’s reshaping the entire landscape of human-AI collaboration. Make sure your organization is ready to leverage this transformation securely and effectively. In a world where AI agents can access your most critical systems and data, security isn’t just important; it’s essential for realizing the full potential of this revolutionary technology.